Cloudera Manager HA Setup Walkthrough

Introduction

Cloudera Manager consists of the following five parts:

- Cloudera Manager Server
- Cloudera Management Service
  - Activity Monitor
  - Alert Publisher
  - Event Server
  - Host Monitor
  - Service Monitor
  - Reports Manager
  - Cloudera Navigator Audit Server
- Relational database
- File storage
- Cloudera Manager Agent

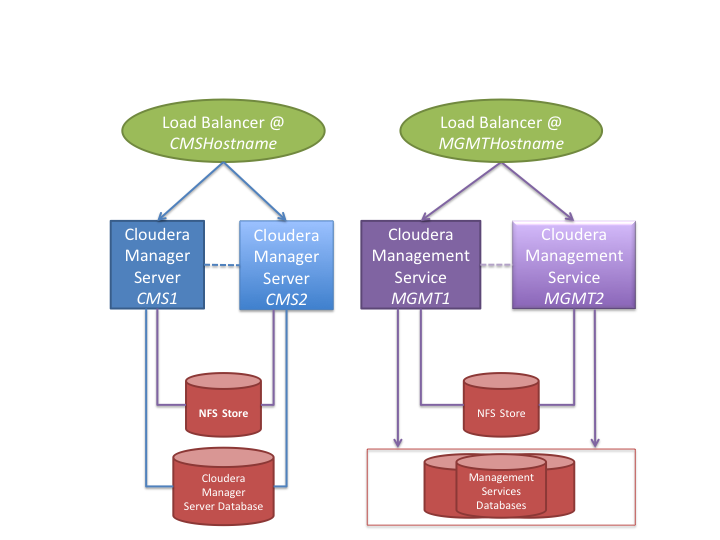

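Note: Cloudera Manager HA is currently implemented with a load balancer and supports only an active-passive (primary/standby) scheme; active-active is not supported. The official Cloudera documentation does not describe how to make supporting services such as HAProxy and NFS highly available.

To achieve HA, the following three kinds of services are required:

- HAProxy (port forwarding)
- NFS (file sharing)
- Pacemaker and Corosync (failover)

Notes:

- Do not host the Cloudera Manager or Cloudera Management Service roles on any host in an existing CDH cluster. Doing so complicates the failover configuration, and overlapping failure domains can cause problems with fault containment and error tracing.
- Deploy the primary and standby on hardware with identical specifications, so that a failover does not degrade performance.
- Give the primary and standby hosts separate power and network connections to limit overlapping failure domains.
- The hostname of the host running Cloudera Management Service is changed during setup, so the CDH cluster can no longer manage that host. Do not install any big data components on it.
- In this deployment, the NFS service must not be hosted on the MGMT hosts.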

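For convenience, the rest of this document uses the following abbreviations:

| Abbreviation | Host |
| --- | --- |
| CMS | CMSHostname |
| MGMT | MGMTHostname |
| NFS | NFS Store |
| DB | Databases |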

If you run into problems during configuration, refer to the official documentation.

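Prerequisites

If a CDH cluster is already installed, stop the cluster in the web UI, then stop the Cloudera Manager services with the following command:

systemctl stop cloudera-scm-server

Then run the following commands on each newly deployed host:

hostnamectl set-hostname <hostname>

Alternatively, use DNS.

vim /etc/sysconfig/network

systemctl stop firewalld
systemctl disable firewalld

setenforce 0

Set the SELinux state to disabled.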
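The command for the persistent SELinux change is not shown above. It presumably refers to the SELINUX setting in /etc/selinux/config, which is an assumption here. A minimal sketch, using a hypothetical helper named set_selinux_mode, that rewrites that setting in place:

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): rewrite the SELINUX= line
# of an SELinux config file.
# Usage: set_selinux_mode <config-file> <enforcing|permissive|disabled>
set_selinux_mode() {
    local file="$1" mode="$2"
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "unsupported mode: $mode" >&2; return 1 ;;
    esac
    # Only the SELINUX= line is replaced; SELINUXTYPE= and comments are left alone.
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Example (as root, assuming the standard path):
#   set_selinux_mode /etc/selinux/config disabled
```

The change in the file takes effect after a reboot, while setenforce 0 only switches the running system to permissive mode.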

yum install -y ntp
systemctl enable ntpd

sysctl vm.swappiness=10
echo "vm.swappiness=10" >> /etc/sysctl.conf
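When persisting a kernel parameter, redirecting with a single > replaces the whole of /etc/sysctl.conf, and a plain append adds a duplicate line on every run. The sketch below shows one way to make the step repeatable. The helper name persist_sysctl is hypothetical and not part of the original procedure.

```shell
#!/bin/bash
# Hypothetical helper: set "key=value" in a sysctl-style file without
# clobbering other entries and without creating duplicates.
# Usage: persist_sysctl <file> <key> <value>
persist_sysctl() {
    local file="$1" key="$2" value="$3"
    touch "$file"
    if grep -q "^${key}[[:space:]]*=" "$file"; then
        # Key already present: update it in place.
        sed -i "s|^${key}[[:space:]]*=.*|${key}=${value}|" "$file"
    else
        # Key absent: append, leaving existing lines untouched.
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Example (as root):
#   persist_sysctl /etc/sysctl.conf vm.swappiness 10 && sysctl -p
```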

echo never > /sys/kernel/mm/transparent_hugepage/defrag
echo never > /sys/kernel/mm/transparent_hugepage/enabled
printf 'echo never > /sys/kernel/mm/transparent_hugepage/defrag\necho never > /sys/kernel/mm/transparent_hugepage/enabled\n' >> /etc/rc.local

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

wget https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.46.tar.gz
tar -zxf mysql-connector-java-5.1.46.tar.gz
mkdir -p /usr/share/java/
cd mysql-connector-java-5.1.46
cp mysql-connector-java-5.1.46-bin.jar /usr/share/java/mysql-connector-java.jar

wget https://archive.cloudera.com/cm6/6.3.1/redhat7/yum/cloudera-manager.repo -P /etc/yum.repos.d/

CMS configuration

vim /etc/haproxy/haproxy.cfg

The default configuration includes a forwarding restriction. To simplify testing, comment out the following line to disable it:

option forwardfor except 127.0.0.0/8

A fresh installation ships with sample sections in this file. In a real environment, comment out the following sections to disable the sample:

frontend main *:5001

backend static

backend app

setenforce 0

setsebool -P haproxy_connect_any=1

vim /etc/haproxy/haproxy.cfg

Port forwarding configuration for Cloudera Manager Server

listen cmf :7180
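Only the first line of the forwarding block survives above. As an illustration of the general shape, and not the author's actual configuration, the sketch below prints a TCP-mode listen stanza that forwards one port to a primary and a standby host. The helper name haproxy_listen and the server labels are hypothetical. The "listen name :port" form mirrors the line above; newer HAProxy releases may require a separate bind line instead.

```shell
#!/bin/bash
# Hypothetical helper: print an HAProxy "listen" stanza that forwards one TCP
# port to a primary host and a standby host.
# Usage: haproxy_listen <name> <port> <primary-host> <standby-host>
haproxy_listen() {
    local name="$1" port="$2" primary="$3" standby="$4"
    cat <<EOF
listen ${name} :${port}
    mode tcp
    option tcplog
    server ${name}-1 ${primary}:${port} check
    server ${name}-2 ${standby}:${port} check
EOF
}

# Example (as root, host names are placeholders):
#   haproxy_listen cmf 7180 CMS1 CMS2 >> /etc/haproxy/haproxy.cfg
```

Because only one Cloudera Manager Server is running at any time, the health check on each server line is what steers traffic to whichever host is currently up.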

haproxy -f /etc/haproxy/haproxy.cfg -c

systemctl enable haproxy --now

MGMT configuration

vim /etc/haproxy/haproxy.cfg

The default configuration includes a forwarding restriction. To simplify testing, comment out the following line to disable it:

option forwardfor except 127.0.0.0/8

A fresh installation ships with sample sections in this file. In a real environment, comment out the following sections to disable the sample:

frontend main *:5001

backend static

backend app

setenforce 0

setsebool -P haproxy_connect_any=1

vim /etc/haproxy/haproxy.cfg

listen am1 :8087

listen ap1 :10101

listen es1 :7184

listen hm1 :8091

listen sm1 :8086

Port forwarding configuration for Cloudera Manager Agent

listen mgmt-agent :9000

listen rm1 :5678

Port forwarding configuration for Cloudera Navigator Audit Server

listen cn1 :7186

haproxy -f /etc/haproxy/haproxy.cfg -c

systemctl enable haproxy --now

systemctl status haproxy

NFS configuration

Note: The NFS host is best placed outside the CDH cluster, which simplifies later maintenance.

yum install -y nfs-utils

mkdir -p /home/cloudera-scm-server
mkdir -p /home/cloudera-host-monitor
mkdir -p /home/cloudera-scm-agent
mkdir -p /home/cloudera-scm-eventserver
mkdir -p /home/cloudera-scm-headlamp
mkdir -p /home/cloudera-service-monitor
mkdir -p /home/cloudera-scm-navigator
mkdir -p /home/etc-cloudera-scm-agent
mkdir -p /home/cloudera-scm-csd

vim /etc/exports.d/cloudera-manager.exports

Add the following content:

/home/cloudera-scm-server *(rw,sync,no_root_squash,no_subtree_check)
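Only the first export line is visible above. Assuming the remaining directories use the same options (an assumption, since those lines are not shown), the full file could be generated with a small loop. The helper name print_exports is hypothetical.

```shell
#!/bin/bash
# Directory names taken from the mkdir step of this section.
EXPORT_DIRS="cloudera-scm-server cloudera-host-monitor cloudera-scm-agent
cloudera-scm-eventserver cloudera-scm-headlamp cloudera-service-monitor
cloudera-scm-navigator etc-cloudera-scm-agent cloudera-scm-csd"

# Hypothetical helper: print one export line per directory, reusing the
# options from the single line shown in the post.
# Usage: print_exports <base-dir>
print_exports() {
    local base="$1" dir
    for dir in $EXPORT_DIRS; do
        printf '%s/%s *(rw,sync,no_root_squash,no_subtree_check)\n' "$base" "$dir"
    done
}

# Example (as root):
#   print_exports /home > /etc/exports.d/cloudera-manager.exports && exportfs -ra
```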

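firewall-cmd --permanent --add-service=mountd
systemctl enable rpcbind --now
systemctl enable nfs --now

If the directories configured above are listed, the service is working correctly. If you run into problems, try the following command.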
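The verification command itself is missing above. One common way to list a server's exports is showmount -e, which is assumed here. The sketch below reads such a listing on stdin and reports any expected directory that does not appear. The helper name missing_exports is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read an export list (for example the output of
# "showmount -e <NFS>") on stdin and report every expected directory that is
# not listed. Returns non-zero if anything is missing.
# Usage: showmount -e <NFS> | missing_exports /home/cloudera-scm-server ...
missing_exports() {
    local listing dir status=0
    listing=$(cat)
    for dir in "$@"; do
        # A directory counts as exported if it is the first field of some line.
        if ! printf '%s\n' "$listing" | awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }'; then
            echo "missing export: $dir"
            status=1
        fi
    done
    return $status
}
```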

Cloudera Manager Server failover test

Once the steps above are complete, you can run the Cloudera Manager Server failover test.

Never start the primary and the standby at the same time. Running both at once can corrupt the database.

CMS 1 configuration (primary)

If Cloudera Manager Server is already installed on CMS 1, skip this step.

yum install -y cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server

yum install -y nfs-utils

If Cloudera Manager Server had not been installed on CMS 1 before, run the following command to copy the configuration files to the NFS server.

scp -r /var/lib/cloudera-scm-server/* <user>@<NFS>:/home/cloudera-scm-server/

Note: If extension parcels have been installed in the CDH cluster, the CSD files must be synchronized between the primary and standby hosts. Their location is shown under Administration > Settings > Custom Service Descriptors > Local Descriptor Repository Path.

rm -rf /var/lib/cloudera-scm-server
mkdir -p /var/lib/cloudera-scm-server

mount -t nfs <NFS>:/home/cloudera-scm-server /var/lib/cloudera-scm-server

Add the following content:

<NFS>:/home/cloudera-scm-server /var/lib/cloudera-scm-server nfs auto,noatime,nolock,intr,tcp,actimeo=1800 0 0
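The target file for this entry is not named above; it is assumed to be /etc/fstab. Since the same kind of entry is needed on several hosts, a repeatable way to add it can help. The sketch below appends the entry only when the mount point is not already listed. The helper name add_nfs_fstab is hypothetical, and the mount options are copied from the line above.

```shell
#!/bin/bash
# Hypothetical helper: append an NFS entry to an fstab-style file only if the
# mount point is not already listed there.
# Usage: add_nfs_fstab <fstab-file> <nfs-host> <export-dir> <mount-point>
add_nfs_fstab() {
    local fstab="$1" host="$2" export_dir="$3" mount_point="$4"
    local opts="auto,noatime,nolock,intr,tcp,actimeo=1800"
    # The second whitespace-separated field of an fstab line is the mount point.
    if awk -v m="$mount_point" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi
    printf '%s:%s %s nfs %s 0 0\n' "$host" "$export_dir" "$mount_point" "$opts" >> "$fstab"
}

# Example (as root, host name is a placeholder):
#   add_nfs_fstab /etc/fstab <NFS> /home/cloudera-scm-server /var/lib/cloudera-scm-server
```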

systemctl start cloudera-scm-server

Open http://<CMS1>:7180 to check that the service is running.

Go to Administration > Settings > Category > Security.

Disable the HTTP Referer Check property.

Open http://<CMS>:7180 to check that the service is reachable through the load balancer.

systemctl stop cloudera-scm-server

CMS 2 configuration (standby)

yum install -y cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server

yum install -y nfs-utils

rm -rf /var/lib/cloudera-scm-server
mkdir -p /var/lib/cloudera-scm-server

mount -t nfs <NFS>:/home/cloudera-scm-server /var/lib/cloudera-scm-server

Add the following content:

<NFS>:/home/cloudera-scm-server /var/lib/cloudera-scm-server nfs auto,noatime,nolock,intr,tcp,actimeo=1800 0 0

mkdir -p /etc/cloudera-scm-server

systemctl disable cloudera-scm-server

systemctl start cloudera-scm-server

Open http://<CMS2>:7180 to check that the service is running.

Open http://<CMS>:7180 to check that the service is reachable through the load balancer.

systemctl stop cloudera-scm-server

Once the standby has shut down, return to the primary and start the cloudera-scm-server service there.

systemctl start cloudera-scm-server

Agent configuration

Update the Agent configuration on every host in the CDH cluster except MGMT1, MGMT2, CMS1, and CMS2.

vim /etc/cloudera-scm-agent/config.ini

Change the following setting:
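The setting to change is not shown above. Judging from the MGMT sections later in this document, it is probably server_host, pointed at the load-balanced name <CMS>; treat that as an assumption. A minimal sketch of making the edit non-interactively, using a hypothetical helper named set_agent_option:

```shell
#!/bin/bash
# Hypothetical helper: update an existing key=value line in the agent's
# config.ini. It refuses to append a missing key, because a line added at the
# end of the file could land in the wrong section.
# Usage: set_agent_option <config-file> <key> <value>
set_agent_option() {
    local file="$1" key="$2" value="$3"
    if ! grep -q "^${key}=" "$file"; then
        echo "$key not found in $file" >&2
        return 1
    fi
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
}

# Example (as root, replace <CMS> with the load balancer host name):
#   set_agent_option /etc/cloudera-scm-agent/config.ini server_host <CMS>
```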

systemctl restart cloudera-scm-agent

Cloudera Management Service failover test

Because the data stored by Cloudera Management Service is of little value here, this walkthrough deletes the service and reinstalls it.

MGMT 1 configuration

yum install -y cloudera-manager-daemons cloudera-manager-agent

scp -r /etc/cloudera-scm-agent <user>@<NFS>:/home/etc-cloudera-scm-agent

yum install -y nfs-utils

mkdir -p /var/lib/cloudera-host-monitor
mkdir -p /var/lib/cloudera-scm-agent
mkdir -p /var/lib/cloudera-scm-eventserver
mkdir -p /var/lib/cloudera-scm-headlamp
mkdir -p /var/lib/cloudera-service-monitor
mkdir -p /var/lib/cloudera-scm-navigator
mkdir -p /etc/cloudera-scm-agent

mount -t nfs <NFS>:/home/cloudera-host-monitor /var/lib/cloudera-host-monitor

Add the following content:

<NFS>:/home/cloudera-host-monitor /var/lib/cloudera-host-monitor nfs auto,noatime,nolock,intr,tcp,actimeo=1800 0 0

vim /etc/cloudera-scm-agent/config.ini

Change the following setting:

server_host=<CMS>

Add the following:

If the resolved IP address matches MGMT1_IP (this host's IP), the configuration is correct.

Note: The values here must be identical on MGMT1 and MGMT2 and must not conflict with other services on either host.

Check the UID and GID of the cloudera-scm user on the host.
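The check command is not shown above. The standard id utility reports a user's numeric IDs, so one possible sketch is a small comparison helper that can be run with the same expected values on both MGMT hosts. The helper name check_ids is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if <user> exists locally with the expected
# numeric UID and primary GID.
# Usage: check_ids <user> <expected-uid> <expected-gid>
check_ids() {
    local user="$1" want_uid="$2" want_gid="$3" uid gid
    uid=$(id -u "$user") || return 1
    gid=$(id -g "$user") || return 1
    if [ "$uid" != "$want_uid" ] || [ "$gid" != "$want_gid" ]; then
        echo "$user has uid=$uid gid=$gid, expected uid=$want_uid gid=$want_gid"
        return 1
    fi
}

# Example: check_ids cloudera-scm <UID> <GID>
```

Matching numeric IDs matter here because file ownership on an NFS share is stored as numbers, not names.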

Set the UID and GID on the host.

usermod -u <UID> cloudera-scm

Find directories still owned by the old user ID.

find / -user <UID_Origin> -type d

Change their ownership to the current user.

chown -R cloudera-scm:cloudera-scm <dir>

chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-scm-eventserver
chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-scm-navigator
chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-service-monitor
chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-host-monitor
chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-scm-agent
chown -R cloudera-scm:cloudera-scm /var/lib/cloudera-scm-headlamp

systemctl restart cloudera-scm-agent

Open http://<CMS>:7180 and check the host list. If <MGMT> appears in the list, the installation succeeded.

In the web UI, delete the Cloudera Management Service, then click the Add button in the upper-right corner to install the service on the new host.

Disable the hostname check on the MGMT hosts.

Because name resolution here relies on the hosts file, disable the hostname check as prompted by the red warning on the page.

After the installation, start the cluster and the Cloudera Management Service and verify that both work.

Stop the cluster and the Cloudera Management Service in the web UI.

systemctl stop cloudera-scm-agent

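MGMT 2 configuration

yum install -y cloudera-manager-daemons cloudera-manager-agent

yum install -y nfs-utils

mount -t nfs <NFS>:/home/cloudera-host-monitor /var/lib/cloudera-host-monitor

Add the following content:

<NFS>:/home/cloudera-host-monitor /var/lib/cloudera-host-monitor nfs auto,noatime,nolock,intr,tcp,actimeo=1800 0 0

vim /etc/cloudera-scm-agent/config.ini

Change the following setting:

server_host=<CMS>

Add the following:

If the resolved IP address matches MGMT2_IP (this host's IP), the configuration is correct.

Note: The values here must match those on MGMT1 and must not conflict with other services on either host.

Check the UID and GID of the cloudera-scm user on the host.

Set the UID and GID on the host.

usermod -u <UID> cloudera-scm

systemctl start cloudera-scm-agent

Note: If startup fails because of the user change, try rebooting the host.

Open http://<CMS>:7180 and check the host list. If <MGMT> appears in the list and its IP address has changed to MGMT2_IP, the installation succeeded.

Start the Cloudera Management Service in the web UI, then run the following command on this host to check whether the service is running locally.

Stop the service in the web UI, then run the following command to stop all services on the standby.

systemctl stop cloudera-scm-agent

Once the standby has shut down, return to the primary and start the cloudera-scm-agent service there.

systemctl start cloudera-scm-agent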

Failure detection configuration

Run the following commands on all four hosts (CMS1, CMS2, MGMT1, and MGMT2):

wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-7/network:ha-clustering:Stable.repo -P /etc/yum.repos.d/

If the file /etc/default/corosync exists, change the following setting:

systemctl disable corosync

CMS1 and CMS2

vim /etc/corosync/corosync.conf

totem {
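The rest of the corosync.conf listing did not survive above. For orientation only, and not as a reconstruction of the author's file, the sketch below prints a typical two-node Corosync 2.x configuration using unicast UDP. The helper name corosync_conf, the cluster name, and the node names are all placeholders.

```shell
#!/bin/bash
# Hypothetical helper: print a two-node corosync.conf that uses unicast UDP.
# Usage: corosync_conf <cluster-name> <node1> <node2>
corosync_conf() {
    local cluster="$1" node1="$2" node2="$3"
    cat <<EOF
totem {
    version: 2
    secauth: off
    cluster_name: ${cluster}
    transport: udpu
}

nodelist {
    node {
        ring0_addr: ${node1}
        nodeid: 1
    }
    node {
        ring0_addr: ${node2}
        nodeid: 2
    }
}

quorum {
    provider: corosync_votequorum
    two_node: 1
}
EOF
}

# Example (as root, names are placeholders):
#   corosync_conf cmf CMS1 CMS2 > /etc/corosync/corosync.conf
```

The two_node setting tells the quorum provider that a single surviving node may keep running, which is what a primary/standby pair needs.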

vim /etc/rc.d/init.d/cloudera-scm-server

Add the following content:

#!/bin/bash
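Only the shebang line of the init script is visible above. Since the resource is later registered as lsb:cloudera-scm-server, the script presumably forwards LSB actions to systemd. The sketch below illustrates that idea under that assumption and is not the author's script. The function name lsb_dispatch and the SYSTEMCTL override are hypothetical.

```shell
#!/bin/bash
# Hypothetical sketch of an LSB-style wrapper that forwards init actions to
# systemd. SYSTEMCTL can be overridden (for example with "echo") for a dry run.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# Usage: lsb_dispatch <unit-name> <start|stop|restart|status>
lsb_dispatch() {
    local unit="$1" action="$2"
    case "$action" in
        start|stop|restart|status)
            # The exit code of systemctl is passed straight back to the caller.
            "$SYSTEMCTL" "$action" "${unit}.service"
            ;;
        *)
            echo "usage: $0 {start|stop|restart|status}" >&2
            return 2
            ;;
    esac
}

# In an actual /etc/rc.d/init.d script the last line would be something like:
#   lsb_dispatch cloudera-scm-server "$1"
```

Pacemaker relies on the status action's exit code to decide whether the resource is running, so the wrapper should not mask it.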

chmod 755 /etc/rc.d/init.d/cloudera-scm-server

systemctl start corosync

Stop the cloudera-scm-server service and disable its autostart.

systemctl disable cloudera-scm-server

systemctl start pacemaker
systemctl enable pacemaker

If both CMS1 and CMS2 appear in the node list, the configuration is correct.

crm configure property no-quorum-policy=ignore
crm configure property stonith-enabled=false

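Put cloudera-scm-server under Pacemaker management.

crm configure primitive cloudera-scm-server lsb:cloudera-scm-server

Note: When this command is run on the second host, it reports that the resource already exists.

crm resource start cloudera-scm-server

Then open http://<CMS>:7180 to check the service status.

crm resource move cloudera-scm-server <CMS2>

Then open http://<CMS>:7180 to check the service status.

Note: After the migration, it is advisable to check the service on the standby host directly to make sure it is running correctly.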

MGMT1 and MGMT2

vim /etc/corosync/corosync.conf

totem {

vim /etc/rc.d/init.d/cloudera-scm-agent

Add the following content:

#!/bin/bash

chmod 755 /etc/rc.d/init.d/cloudera-scm-agent

systemctl start corosync
systemctl enable corosync

Stop the cloudera-scm-agent service and disable its autostart.

systemctl disable cloudera-scm-agent

systemctl start pacemaker
systemctl enable pacemaker

If both MGMT1 and MGMT2 appear in the node list, the configuration is correct.

crm configure property no-quorum-policy=ignore
crm configure property stonith-enabled=false

Create the OCF (Open Cluster Framework) resource agent.

mkdir -p /usr/lib/ocf/resource.d/cm

vim /usr/lib/ocf/resource.d/cm/agent

Add the following content:

#!/bin/sh

chmod 770 /usr/lib/ocf/resource.d/cm/agent

Put cloudera-scm-agent under Pacemaker management.

crm configure primitive cloudera-scm-agent ocf:cm:agent

crm resource start cloudera-scm-agent

crm resource move cloudera-scm-agent <MGMT2>

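Troubleshooting

crm reports insufficient permissions

If this happens, fix the permissions on the files that crm needs, then run the following command:

crm resource cleanup <service>

After that, manage the service again:

crm resource <option> <service>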